Object Fusion Tracking Based on Visible and Infrared Images Using Fully Convolutional Siamese Networks

Abstract

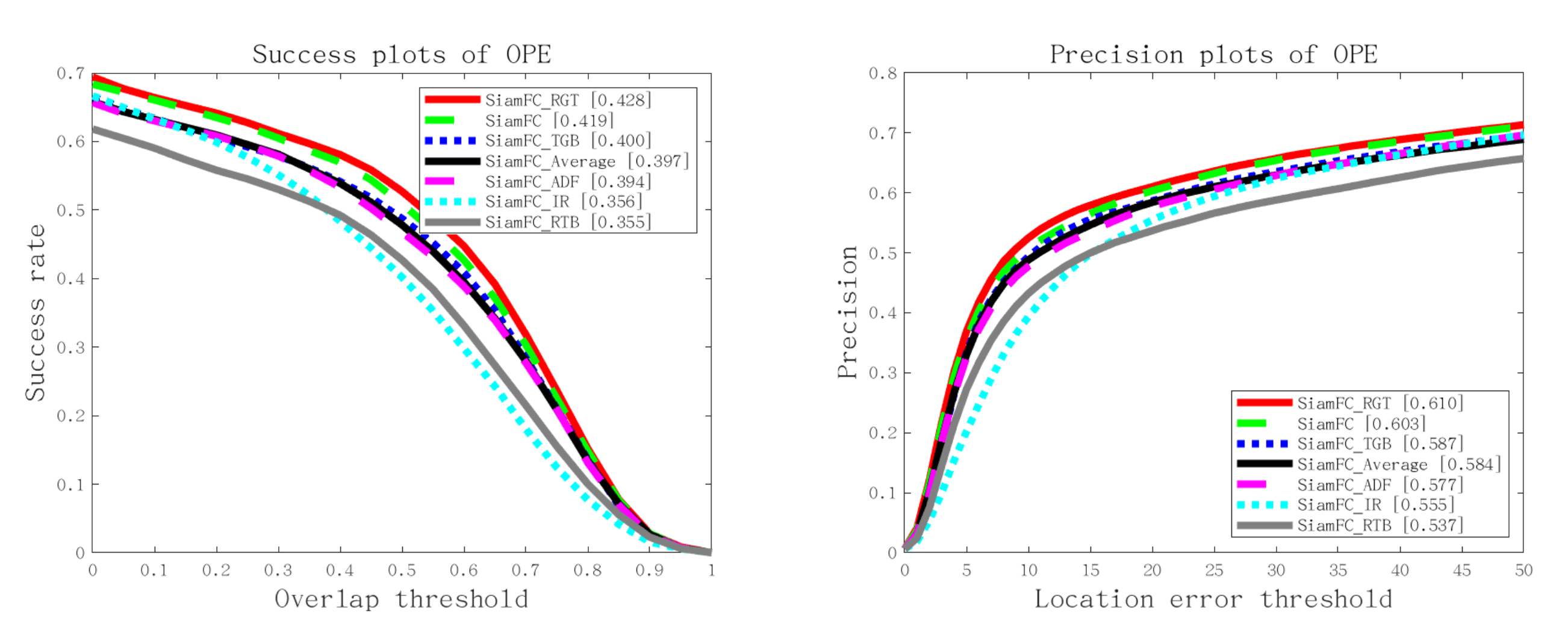

Visual tracking is of great importance and has attracted considerable interest in recent years. However, tracking based on visible images may fail when those images are unreliable, for example under poor illumination or in fog. Infrared images capture thermal information and are therefore insensitive to these factors. Because the two modalities are complementary, object fusion tracking based on visible and infrared images has recently attracted great attention. This paper proposes a pixel-level fusion tracking method based on fully convolutional Siamese networks, which have shown great potential in RGB object tracking. Visible and infrared images are first fused, and tracking is then performed on the fused images. Extensive experiments on a large dataset containing challenging scenarios were conducted to evaluate tracking performance. The results indicate that the proposed fusion tracking method improves tracking performance compared with methods based on images of a single modality.
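The fuse-then-track pipeline described in the abstract can be illustrated with a toy sketch. It rests on several assumptions that do not come from the paper: the fusion rule is a plain weighted average, the Siamese embedding network is replaced by raw pixel values, and the function names (`fuse_pixels`, `cross_correlate`, `locate_peak`) are hypothetical. Only the overall structure follows the abstract: fuse the two modalities per pixel, then correlate a template against the search image and take the response peak as the object location.

```python
def fuse_pixels(visible, infrared, weight=0.5):
    """Pixel-level fusion as a weighted average.

    Assumption: the averaging rule is illustrative only; the abstract does
    not state which fusion rule the authors use.
    """
    return [
        [weight * v + (1.0 - weight) * t for v, t in zip(v_row, t_row)]
        for v_row, t_row in zip(visible, infrared)
    ]


def cross_correlate(search, template):
    """Slide the template over the search image and return the response map.

    Assumption: a Siamese tracker correlates learned feature embeddings;
    raw pixel values stand in for them here.
    """
    th, tw = len(template), len(template[0])
    sh, sw = len(search), len(search[0])
    response = []
    for y in range(sh - th + 1):
        row = []
        for x in range(sw - tw + 1):
            score = sum(
                search[y + dy][x + dx] * template[dy][dx]
                for dy in range(th)
                for dx in range(tw)
            )
            row.append(score)
        response.append(row)
    return response


def locate_peak(response):
    """Return the (row, col) of the highest response value."""
    positions = (
        (y, x) for y in range(len(response)) for x in range(len(response[0]))
    )
    return max(positions, key=lambda p: response[p[0]][p[1]])


# Toy frame: the target is invisible in the dark visible image
# but shows up as a warm 2x2 block in the infrared image.
visible = [[0.0] * 6 for _ in range(6)]
infrared = [[0.0] * 6 for _ in range(6)]
for y in (2, 3):
    for x in (3, 4):
        infrared[y][x] = 1.0

fused = fuse_pixels(visible, infrared)
template = [row[3:5] for row in fused[2:4]]  # crop the target from the fused frame
print(locate_peak(cross_correlate(fused, template)))
```

In this toy frame the visible image alone carries no signal, so correlating on it would give a flat response map, while the fused image still exposes the target. Unnormalized correlation is used only for brevity; on real images it would favor bright regions.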

BibTeX

@INPROCEEDINGS{9011253,
  title={Object Fusion Tracking Based on Visible and Infrared Images Using Fully Convolutional Siamese Networks},
  author={Zhang, Xingchen and Ye, Ping and Qiao, Dan and Zhao, Junhao and Peng, Shengyun and Xiao, Gang},
  booktitle={2019 22th International Conference on Information Fusion (FUSION)},
  year={2019},
  pages={1-8},
}

Ping Ye

Dan Qiao

Gang Xiao
