UniTable: Towards a Unified Framework for Table Structure Recognition via Self-Supervised Pretraining

Abstract

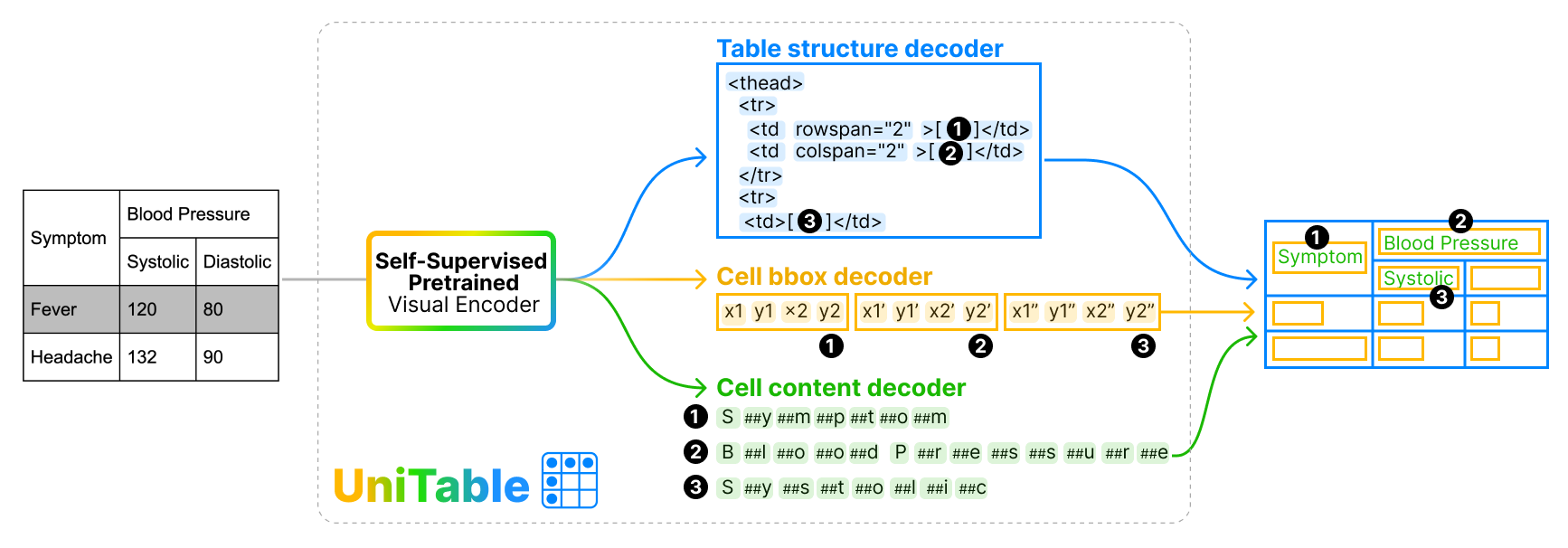

Tables convey factual and quantitative data with implicit conventions created by humans that are often challenging for machines to parse. Prior work on table structure recognition (TSR) has mainly centered around complex task-specific combinations of available inputs and tools. We present UniTable, a training framework that unifies both the training paradigm and the training objective of TSR. Its training paradigm combines the simplicity of purely pixel-level inputs with the effectiveness and scalability enabled by self-supervised pretraining (SSP) on diverse unannotated tabular images. Our framework casts the training objectives of all three TSR tasks, namely extracting table structure, cell content, and cell bounding boxes (bbox), into a single task-agnostic training objective: language modeling. Extensive quantitative and qualitative analyses highlight UniTable’s state-of-the-art (SOTA) performance on four of the largest TSR datasets. To promote reproducible research, enhance transparency, and foster SOTA innovations, we open-source our code at https://anonymous.4open.science/r/icmlreview/notebooks/full_pipeline.ipynb and release the first-of-its-kind Jupyter Notebook of the whole inference pipeline, which is fine-tuned across multiple TSR datasets and supports all three TSR tasks.
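To make the idea of a single language-modeling objective concrete, the following is a minimal sketch of how the three TSR outputs could each be serialized into a token sequence and turned into next-token prediction pairs. It is an illustration under stated assumptions, not the UniTable implementation: the function names, the `bbox-<n>` token format, the `<sos>`/`<eos>` markers, the character-level content tokens, and the default bin count are all hypothetical choices made for this example.

```python
import re
from typing import List, Sequence, Tuple


def structure_to_tokens(html: str) -> List[str]:
    """Split an HTML table skeleton into one token per tag (assumed scheme)."""
    return re.findall(r"<[^>]+>", html)


def bbox_to_tokens(
    bbox: Sequence[float], width: int, height: int, bins: int = 448
) -> List[str]:
    """Quantize pixel coordinates (x0, y0, x1, y1) into discrete bin tokens.

    The bin count and the "bbox-<n>" naming are hypothetical.
    """
    x0, y0, x1, y1 = bbox

    def quantize(value: float, size: int) -> int:
        # Map [0, size] onto integer bins [0, bins - 1].
        return min(bins - 1, max(0, int(value * bins / size)))

    return [
        f"bbox-{quantize(x0, width)}",
        f"bbox-{quantize(y0, height)}",
        f"bbox-{quantize(x1, width)}",
        f"bbox-{quantize(y1, height)}",
    ]


def content_to_tokens(text: str) -> List[str]:
    """Character-level tokens for cell content (an assumed, simple tokenizer)."""
    return list(text)


def to_training_pairs(tokens: List[str]) -> Tuple[List[str], List[str]]:
    """Build shifted (input, target) sequences for next-token prediction."""
    sequence = ["<sos>"] + tokens + ["<eos>"]
    return sequence[:-1], sequence[1:]


if __name__ == "__main__":
    # All three tasks end up as token sequences fed to the same objective.
    for tokens in (
        structure_to_tokens("<table><tr><td></td></tr></table>"),
        bbox_to_tokens((0, 0, 224, 112), width=448, height=224),
        content_to_tokens("3.14"),
    ):
        inputs, targets = to_training_pairs(tokens)
        print(inputs, "->", targets)
```

Once every task output is a token sequence, a single decoder trained with cross-entropy on the shifted targets can in principle serve all three tasks, which is the sense in which the objective is task-agnostic.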

BibTeX

@article{peng2024unitable,
  title={UniTable: Towards a Unified Framework for Table Structure Recognition via Self-Supervised Pretraining},
  author={Peng, ShengYun and Lee, Seongmin and Wang, Xiaojing and Balasubramaniyan, Rajarajeswari and Chau, Duen Horng},
  journal={arXiv preprint arXiv:2403.04822},
  year={2024}
}