Self-supervised Pre-training for Table Structure Recognition Transformer

Abstract

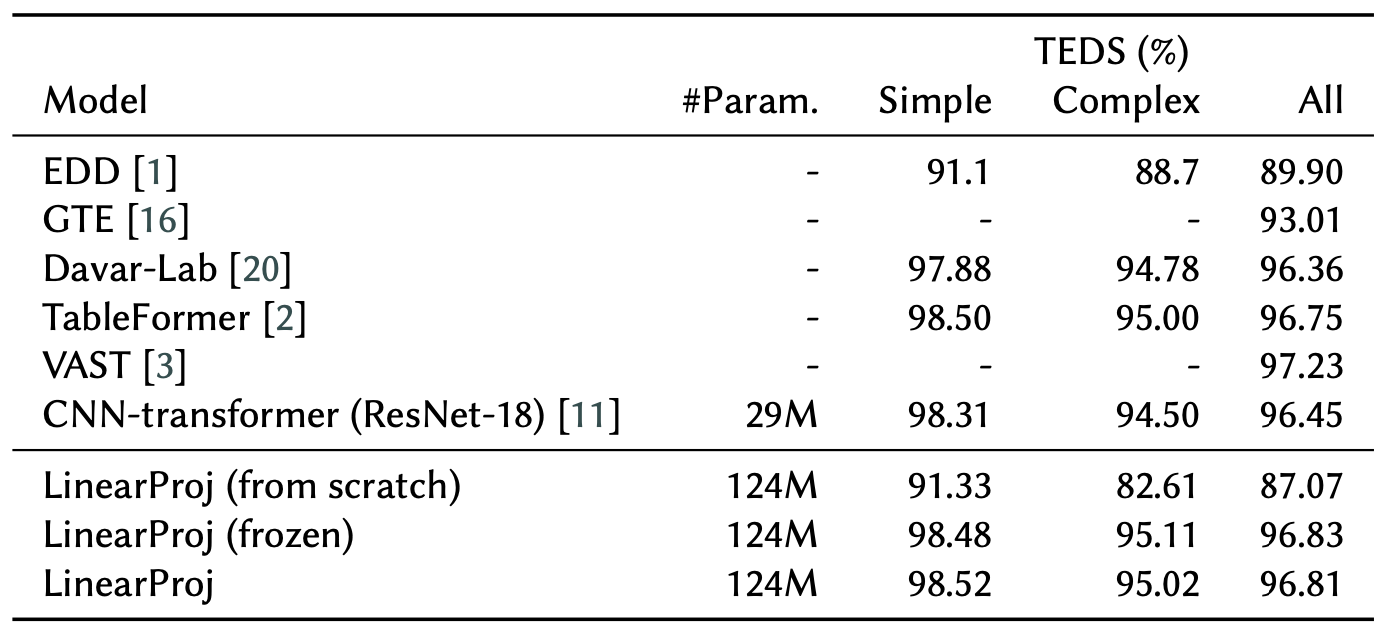

Table structure recognition (TSR) aims to convert tabular images into a machine-readable format. Although the hybrid convolutional neural network (CNN)-transformer architecture is widely used in existing approaches, the linear projection transformer has outperformed the hybrid architecture in numerous vision tasks due to its simplicity and efficiency. However, existing research has demonstrated that directly replacing the CNN backbone with a linear projection leads to a marked performance drop. In this work, we resolve the issue by proposing a self-supervised pre-training (SSP) method for TSR transformers. We discover that the performance gap between the linear projection transformer and the hybrid CNN-transformer can be mitigated by SSP of the visual encoder in the TSR model.

BibTeX

@article{peng2024self,
  title={Self-supervised Pre-training for Table Structure Recognition Transformer},
  author={ShengYun Peng and Seongmin Lee and Xiaojing Wang and Raji Balasubramaniyan and Duen Horng Chau},
  year={2024}
}